I think I’ve managed to oversample the volume. However, whenever I do so, the panels turn blank on a relatively powerful computer, and on a weaker computer Slicer lags intensely. Is it possible to create the segmentation mask first and then oversample the volume? Thanks.

When you oversample, it is also recommended to always crop the volume to the minimum necessary size (using the Crop Volume module) to keep memory usage reasonable.

You can also conserve memory by casting the volume to the smallest necessary scalar type, using the Cast Scalar Volume module. For example, a voxel stored as a double-precision float takes 8 bytes, while a short integer takes just 2 bytes.
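The arithmetic behind the scalar-type advice can be sketched in plain Python with NumPy. This is not Slicer code; the 512 x 512 x 256 dimensions are hypothetical, and the helper names are made up for illustration. The second helper checks the "smallest necessary" part: an integer type is only safe if the volume's whole intensity range fits in it.

```python
import numpy as np

def volume_memory_bytes(dims, dtype):
    """Memory needed to hold a volume with the given dimensions and scalar type."""
    n_voxels = int(np.prod(dims, dtype=np.int64))
    return n_voxels * np.dtype(dtype).itemsize

def fits_in_integer_type(dtype, vmin, vmax):
    """True if the intensity range [vmin, vmax] can be stored in an integer type without clipping."""
    info = np.iinfo(dtype)
    return info.min <= vmin and vmax <= info.max

# Hypothetical 512 x 512 x 256 volume, for illustration only.
dims = (512, 512, 256)
for dtype in ("float64", "float32", "int16", "uint8"):
    mib = volume_memory_bytes(dims, dtype) / 2**20
    print(f"{dtype:>8}: {mib:6.0f} MiB")
```

For the same grid, double (`float64`) needs four times the memory of short (`int16`). Before casting, compare the volume's scalar range (shown in the Volumes module) against the target type's limits.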

The Segment Editor internally creates a resampled copy of the master volume whenever you use an effect that relies on image intensity, so you will not save much memory by creating the mask first and oversampling afterwards.

Somehow I’m still getting blank panels, even after cropping the volume significantly.

I’ve tried this on several different computers and I’m getting the same results.

Cropped ROI

Volume after oversampling by a ratio of 2

Which Slicer version do you use?

Is the volume under a transform? (if you are not sure, attach a screenshot of Data module)

What are the dimensions and scalar type of the original volume? (if you are not sure, attach a screenshot of Volumes module, with Volume information section open)

I’m using 4.11.20200930, and I get similar results with the unstable version 4.13.

Window from the Data module

Window from the Volumes module

The actual volume is proportionally smaller, by a factor of 100.

Do I need to convert the volume to a labelmap?

Assuming the dimensions reported above are from before cropping/oversampling, your volume is about 256 MB. If you crop with the 0.5 spacing option, the spacing is halved along each of the three axes, so the data size increases 8-fold (2 × 2 × 2), to about 2 GB. And if you also checked the ‘isotropic’ option, the data size will increase almost 100-fold (to about 25 GB), which may slow down a computer with less memory, since Slicer would need to use virtual memory.
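The size estimate above can be reproduced with a small, hypothetical helper: resampling the same physical region onto a finer grid multiplies the voxel count along each axis by old spacing / new spacing. The actual dimensions and scalar type are only in the screenshot, so the sketch assumes 512 x 512 x 256 voxels of 4-byte floats (which gives the 256 MB figure) and the 1 x 1 x 20 voxel size mentioned in this thread.

```python
import numpy as np

def resampled_memory_bytes(dims, spacing, new_spacing, bytes_per_voxel):
    """Estimate memory after resampling the same physical region onto a new voxel spacing."""
    dims = np.asarray(dims, dtype=float)
    # The physical extent is unchanged, so the voxel count along each axis
    # scales by old_spacing / new_spacing.
    scale = np.asarray(spacing, dtype=float) / np.asarray(new_spacing, dtype=float)
    new_dims = np.ceil(dims * scale).astype(np.int64)
    return int(np.prod(new_dims)) * bytes_per_voxel

# Hypothetical volume: 512 x 512 x 256 voxels of 4-byte floats (256 MiB),
# with the 1 x 1 x 20 voxel size discussed in this thread.
dims, spacing = (512, 512, 256), (1.0, 1.0, 20.0)
before = resampled_memory_bytes(dims, spacing, spacing, 4)
# The 0.5 setting halves every spacing component.
half = tuple(s * 0.5 for s in spacing)
after = resampled_memory_bytes(dims, spacing, half, 4)
print(before / 2**20, "MiB ->", after / 2**30, "GiB")  # 256.0 MiB -> 2.0 GiB
```

The function accepts any target spacing, so once you know the output spacing the isotropic option actually produces, you can pass it in the same way to check that case.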

The volume is not under a transform (good) and not very big (good). However, it is very sparsely sampled along one axis (the voxel size is 1x1x20, a needle-like shape instead of the ideal cube), and the scalar range is quite unusual, too (normally it spans a few thousand). Despite these unusual properties, the volume should still be usable, but some default processing parameters may need to be adjusted. For example, as @muratmaga pointed out above, if you are not careful you can end up with an enormous volume.

Can you show the Volume Information section for the cropped volume?

It would also be useful to see the image histogram (bottom section of the Volumes module) for both the original and the cropped volume.

Here is the image histogram:

The cropped volume size:

Thanks all of you. Really appreciate the help.

Here’s an update of what I’ve tried.

Switching to older versions of 3D Slicer doesn’t help; I still get the same results.

I’ve also tried the sample data (SampleData - Slicer Wiki).

I’ve tested oversampling on MRHead, CTChest, and DTIBrain, and I still get the same results.

I think I might be skipping some preliminary step before oversampling. I’m also noticing that I cannot oversample the volume if it is selected in the “Master Volume” drop-down.

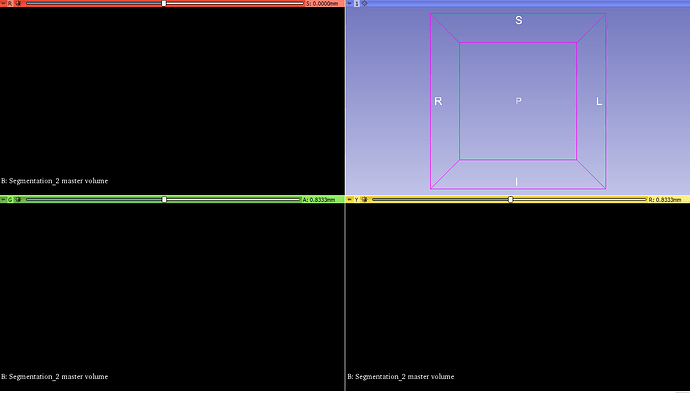

So this is what my window looks like just before I start oversampling.

Do you mean that oversampling slows down Slicer? That is expected. An oversampling factor of 2.0 increases memory usage and the amount of computation 8-fold (2 × 2 × 2), but if that makes you run out of physical RAM and your computer starts swapping, it won’t be just 8 times slower but hundreds of times slower.

If you cannot switch to a powerful desktop computer with lots of RAM, I would recommend always cropping the volume when you resample, so that it stays at about a few hundred voxels along each axis.
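The “few hundred voxels per axis” rule of thumb can be turned into a quick pre-check. This sketch uses hypothetical helpers and two assumed numbers: a budget of 300 voxels per axis, and a rule that the oversampled volume should use at most a quarter of physical RAM, leaving room for the extra copies Slicer may hold (for example, the resampled master volume the Segment Editor creates).

```python
def max_crop_extent_mm(spacing_mm, oversampling, max_voxels_per_axis=300):
    """Largest physical extent (mm) per axis that stays within the voxel budget after oversampling."""
    # After oversampling, each output voxel is spacing / oversampling wide.
    return [max_voxels_per_axis * s / oversampling for s in spacing_mm]

def fits_in_ram(dims, oversampling, bytes_per_voxel, ram_bytes, safety=0.25):
    """Rough check that the oversampled volume uses at most `safety` of physical RAM."""
    n_voxels = 1
    for d in dims:
        n_voxels *= round(d * oversampling)
    return n_voxels * bytes_per_voxel <= safety * ram_bytes

# 1 x 1 x 20 mm voxels oversampled by 2: the largest ROI that keeps ~300 voxels per axis.
print(max_crop_extent_mm((1.0, 1.0, 20.0), 2))
# A 300^3 crop of 2-byte voxels, oversampled by 2, on a 16 GB machine.
print(fits_in_ram((300, 300, 300), 2, 2, 16 * 2**30))
```

The crop extents give a target size for the ROI in the Crop Volume module; the RAM check is only a coarse guard, not a prediction of Slicer’s actual peak memory use.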

How much RAM and what CPU do you have?

For larger volumes, it does make Slicer lag, but once the lag is gone I end up with 3 blank panels like this (my computer doesn’t seem to slow down afterwards):

(so to clarify, this is post-oversampling)

I currently have a PC with 16 GB of RAM.

I’ve also tried cropping it down significantly, and I get exactly the same results, just without the lag after execution.

What CPU and graphics do you have?

Intel Xeon 6148 at 2.40 GHz and an NVIDIA GRID M10-2Q

OK, this CPU, graphics, and RAM should be sufficient to handle something like CTChest oversampled 2x along each axis.

The blank image is just a misunderstanding. It is generated for your convenience when you specify the geometry without selecting a master volume (for the case when you don’t have any input volume and want to create a segmentation purely by free painting). If you have a volume to segment, select it as the master volume.

Oh, I hadn’t thought of that. Let me try it out.

Hmmm… when I try to oversample the master volume, nothing happens (the spacing between each image is not reduced).

Can you try these steps in a blank scene?

- Load the MRHead sample data.

- Go to Segment Editor, click the segmentation geometry button, and from the Source Geometry drop-down choose ‘MRHead’. Set the oversampling to 2. Do you see something like this?

- Hit OK.

- Create a blank segment, then use the Threshold effect with the range 69.75 to 258.00 and hit Apply.

- Go to Segmentation Geometry again, and this time choose the segmentation as your source geometry. Confirm that you are seeing:

Oversampling MRHead by 2 should not cause any noticeable delay or lag on your computer. If these steps work, try them with your data.
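Conceptually, the threshold step marks every voxel of the master volume whose intensity falls inside the chosen range. The NumPy sketch below illustrates that idea on a toy array with made-up intensities; it is not Slicer’s implementation, and it assumes both range limits are inclusive.

```python
import numpy as np

def threshold_mask(image, lower, upper):
    """Binary mask of voxels whose intensity lies in [lower, upper] (inclusive bounds assumed)."""
    return (image >= lower) & (image <= upper)

# Toy 1-D "image" standing in for MRHead intensities (hypothetical values).
image = np.array([0.0, 50.0, 69.75, 120.0, 258.0, 300.0])
mask = threshold_mask(image, 69.75, 258.00)
print(mask.astype(np.uint8))  # [0 0 1 1 1 0]
```

Because the mask is computed from the master volume’s intensities, the Threshold effect needs a real master volume selected; the blank image generated when no master volume is chosen has nothing meaningful to threshold.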

The strange part is I’m not able to threshold.

This is what I get:

Notice the threshold range is 0.

Also, to clarify, nothing changes when I select “MRHead” as the master volume before oversampling.

You are doing something wrong. Your master volume should have been MRHead in this step.

First read the Segment Editor documentation,

and then complete this tutorial.

Thanks! It’s working now. Oversampling the segmentation works after applying the threshold and then creating another threshold segmentation.

Also, are there any peer-reviewed journal papers or articles where the oversampling algorithm was first introduced?