Operating system: Windows 10

Slicer version: 4.10.2

Hello,

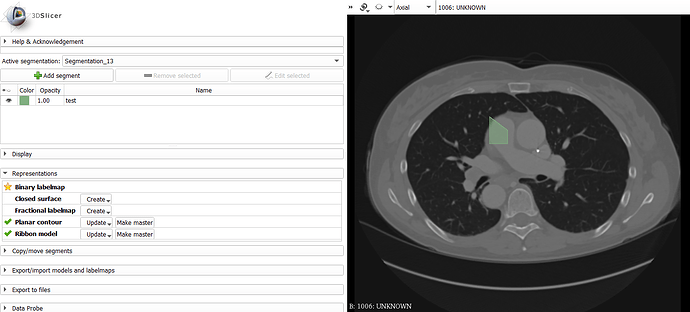

I have some manually drawn ROIs represented as points in the (row, column, slice) coordinate frame. I am trying to programmatically generate a vtkMRMLSegmentationNode from those points. I also want to be able to export an image that is 1 everywhere inside the polygon and 0 outside. My current approach is as follows, with the actual code below:

1. Turn the polygon coordinates (row, column, slice) into a binary numpy array that is 1 inside the polygon and 0 everywhere else.

2. Take the binary numpy array and put it into a vtkMRMLLabelMapVolumeNode.

3. Import that labelmap node into a vtkMRMLSegmentationNode.

4. Save the segmentation node as an RTStruct (not shown in the code because I’m doing it manually).

The problem I am having is with step 1 above. Although scikit-image and PIL have great functions to help convert polygon points into a binary map (e.g. skimage.draw.polygon), I cannot successfully install scikit-image or PIL into Slicer's Python interpreter. Steps 2-4 work fine, as verified by manually creating a box mask using numpy array slicing.
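For step 1, a polygon can be rasterized with NumPy alone, which avoids installing extra packages. The sketch below is one possible approach, not a Slicer function: `polygon_to_mask` is a hypothetical helper name, it applies the even-odd (ray-crossing) rule, and it assumes the vertices are listed in drawing order and that a pixel counts as inside when its centre (the integer row/column index) lies inside the polygon. Pixels exactly on the boundary may be treated differently than in skimage.draw.polygon.

```python
import numpy as np

def polygon_to_mask(rows, cols, shape):
    """Return a boolean (n_rows, n_cols) mask, True where the pixel centre
    lies inside the polygon (even-odd rule). Hypothetical helper."""
    r = np.asarray(rows, dtype=float)
    c = np.asarray(cols, dtype=float)
    # Row and column index of every pixel in the image
    rr, cc = np.mgrid[:shape[0], :shape[1]]
    inside = np.zeros(shape, dtype=bool)

    # Walk the edges (vertex j -> vertex i); the last edge closes the polygon.
    j = len(r) - 1
    for i in range(len(r)):
        # True where the edge spans the pixel's row
        straddles = (r[i] > rr) != (r[j] > rr)
        # Column at which the edge crosses that row; edges parallel to the
        # rows give inf/nan here but are already excluded by `straddles`.
        with np.errstate(divide="ignore", invalid="ignore"):
            c_cross = (c[j] - c[i]) * (rr - r[i]) / (r[j] - r[i]) + c[i]
        # Each crossing to the right of the pixel flips inside/outside
        inside ^= straddles & (cc < c_cross)
        j = i
    return inside

# Toy square: rows 100..150, columns 100..150
mask = polygon_to_mask([100, 150, 150, 100], [100, 100, 150, 150], (512, 512))
```

The result can be converted with `mask.astype(int)` before it is placed in a labelmap volume.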

Does Slicer already have a function that can convert a polygon to a binary mask? Or, alternatively, can I somehow load the polygon coordinates directly into a segmentation node? The polygons are 3D (i.e. they span multiple rows, columns, and slices).
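Because the polygons span multiple slices, one possible approach is to rasterize slice by slice: group the (row, column, slice) vertices by slice index, fill each group in 2D, and write the result into a volume indexed [slice, row, column], which is the layout returned by slicer.util.arrayFromVolume. The following is a minimal sketch under stated assumptions: one closed contour per slice, vertices in drawing order, and integer slice indices. `stack_slice_masks` and the `fill_2d` callback are hypothetical names; `fill_2d` stands in for whichever 2D polygon rasterizer is available.

```python
import numpy as np

def stack_slice_masks(points_rcs, shape, fill_2d):
    """Build a (slices, rows, columns) uint8 mask from (row, column, slice) vertices.

    fill_2d(rows, cols, (n_rows, n_cols)) must return a 2D boolean mask.
    It is a hypothetical callback, not part of Slicer or NumPy.
    """
    points = np.asarray(points_rcs)
    volume = np.zeros(shape, dtype=np.uint8)
    for k in np.unique(points[:, 2]):
        # Vertices on this slice, kept in their original drawing order
        on_slice = points[points[:, 2] == k]
        mask_2d = np.asarray(
            fill_2d(on_slice[:, 0], on_slice[:, 1], tuple(shape[1:])), dtype=bool
        )
        # volume[int(k)] is a view, so this writes into the 3D array
        volume[int(k)][mask_2d] = 1
    return volume
```

With `shape` taken from `slicer.util.arrayFromVolume(im_node).shape`, the returned array has the same layout as the reference image.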

Here are the relevant portions of my code:

    import numpy as np
    import slicer

    # createSegNode creates a segmentation node from a mask and a name.
    # Inputs:
    #   mask: a 3D numpy array with 1s everywhere inside the polygons and 0s elsewhere
    #   name: name to assign to the new segmentation node
    # Note: reads the module-level im_node (defined below) for origin and spacing.
    def createSegNode(mask, name):
        # create volume node representing a labelmap
        volumeNode = slicer.mrmlScene.AddNewNodeByClass('vtkMRMLLabelMapVolumeNode')
        volumeNode.CreateDefaultDisplayNodes()
        # place np array in volume node
        slicer.util.updateVolumeFromArray(volumeNode, mask.astype(int))
        # orient the labelmap in the right direction
        volumeNode.SetOrigin(im_node.GetOrigin())
        volumeNode.SetSpacing(im_node.GetSpacing())
        imageDirections = [[1, 0, 0], [0, -1, 0], [0, 0, -1]]
        volumeNode.SetIJKToRASDirections(imageDirections)
        # convert labelmap into a segmentation object
        segNode = slicer.mrmlScene.AddNewNodeByClass('vtkMRMLSegmentationNode')
        slicer.modules.segmentations.logic().ImportLabelmapToSegmentationNode(volumeNode, segNode)
        segNode.SetName(name)

    # create a toy 2d polygon mask
    r = [100, 150, 150, 100]  # polygon rows
    c = [100, 100, 150, 150]  # polygon columns
    m2 = np.zeros((2, len(r)))
    m2[0, :] = r  # first row holds the vertex rows
    m2[1, :] = c  # second row holds the vertex columns
    # I don't have a good function to do this reliably. Right now I'm using a
    # function I found on stackoverflow (create_polygon, not shown) that doesn't
    # accurately reproduce the polygons.
    polygon_array = create_polygon([512, 512], m2.T).T

    # put the polygon into a 3d array (here a box mask made by array slicing)
    im_node = slicer.util.getNode("<< node name>>")
    node_np = slicer.util.arrayFromVolume(im_node)
    polygon_array_3d = np.zeros(node_np.shape)
    polygon_array_3d[20, 200:250, 200:250] = 1

    # calls the function above; seg is the segmentation name (a string defined elsewhere)
    createSegNode(polygon_array_3d, seg)
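One way to quantify how well a rasterizer reproduces a polygon is to compare the number of mask pixels with the polygon's analytic area from the shoelace formula. The sketch below assumes a simple (non-self-intersecting) polygon with vertices in drawing order; `shoelace_area` and `area_mismatch` are hypothetical helper names. A small mismatch along the boundary is expected, while a large one suggests swapped row/column order or a transposed mask.

```python
import numpy as np

def shoelace_area(rows, cols):
    """Analytic area of a simple polygon, in pixel units."""
    r = np.asarray(rows, dtype=float)
    c = np.asarray(cols, dtype=float)
    # 0.5 * |sum(r_i * c_{i+1}) - sum(c_i * r_{i+1})|, indices wrapping around
    return 0.5 * abs(np.dot(r, np.roll(c, -1)) - np.dot(c, np.roll(r, -1)))

def area_mismatch(mask, rows, cols):
    """Number of nonzero mask pixels minus the analytic polygon area."""
    return float(np.count_nonzero(mask)) - shoelace_area(rows, cols)

# Example: a box mask made by slicing, compared with the toy square's vertices
box = np.zeros((512, 512))
box[100:150, 100:150] = 1
mismatch = area_mismatch(box, [100, 150, 150, 100], [100, 100, 150, 150])
```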