Operating system: Windows 11

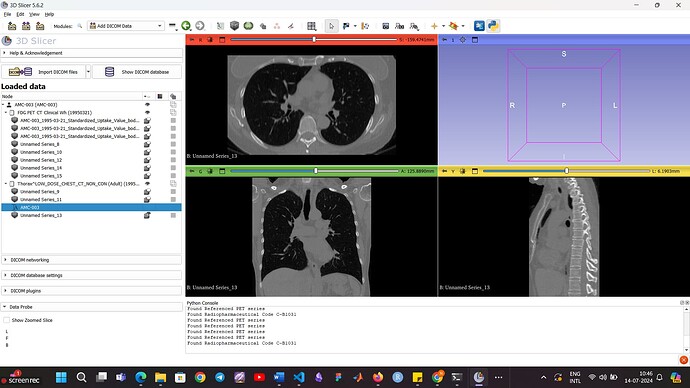

Slicer version: 5.6.2

Expected behavior: Quantitative Reporting should allow me to import AIM XML files.

Actual behavior: It does not allow me to do that.

It would probably be very easy to write an importer (a short Python script). What would you like to import, and what would you like to generate from it?

So, I have tumor segmentations of a patient saved as XML files. I also have the complete PET/CT scans of the same patient. I want to load them both together to see the mask created. I’m just not able to do that.

I just started using 3D Slicer, so I’m a complete beginner. I watched some videos on how to load data and saw that you can load data using both ADD DATA and ADD DICOM DATA. If I select the directory (with all scans of a patient) using ADD DATA, all my file names are lost.

Now, concerning the annotations: I tried to load the XML file and place it under the patient itself. There is an option to ‘Export to DICOM’, and when I do that, this comes up.

The question is: how do I view the annotation on the scans themselves?

I want to view AMC-003 (XML file).

Thank you for any help.

AIM format has not been adopted by the medical imaging community. I am not aware of any software that supports it.

That said, since it is an XML-based format, which is human-readable, and it may still be possible to find some specifications online, it would be fairly straightforward to write an importer. If you have Python scripting experience (or are willing to learn), then you can do it. You can use the OsiriX ROI file importer as an example, which creates a segmentation from a set of parallel contours.

If you don’t have Python scripting experience, or you would rather not spend time on this, then you can contact Slicer Commercial Partners to develop it for you, hire a student, or find someone in the Slicer community (by posting in the Jobs category) to help.

Hello. Thank you for the reply. I do have Python experience, so it seems like I’ll have to work with a script. The example is informative; I’ll try using it.

Thanks once again!

Hello. So I tried doing what you said. I wrote a script to extract the segmentations and convert them to NRRD format. There is one problem, though, which I tried solving using your answer here: How to find the image match between the external reference with the annotations and the sequence in 3DSlicer? - #4 by lassoan

The segmentation is 2D (since I have only one AIM annotation XML file for a patient), so the doctor must have made a segmentation on one of the slices (123 here) and exported it, if I’m not wrong. Now I’m able to display the 2D segmentation in 3D Slicer, but the location is off. I tried using the header of just one DICOM file (1-123 only), and this is what it gives:

```python
OrderedDict([('type', 'short'),
             ('dimension', 3),
             ('space', 'left-posterior-superior'),
             ('sizes', array([512, 512, 1])),
             ('space directions',
              array([[0.53320312, 0.        , 0.        ],
                     [0.        , 0.53320312, 0.        ],
                     [0.        , 0.        , 1.        ]])),
             ('kinds', ['domain', 'domain', 'domain']),
             ('endian', 'little'),
             ('encoding', 'gzip'),
             ('space origin',
              array([-131.23339844, -274.73339844, -140.        ]))])
```

When I try to enter these values as the header for the 2D image, it obviously throws an error. Could you please tell me how to fix this? Which space fields should I set for the 2D segmentation so that it loads in the correct position? When I plot it over the scan using plt.imshow(), it looks fine.
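The error most likely comes from a shape mismatch: the header describes a 3D volume (`dimension: 3`, `sizes: 512 512 1`), but the label map is a 2D array. A minimal sketch, assuming the label map is a NumPy array indexed `[row, column]` (like a DICOM `pixel_array`) and that the slice orientation is the identity shown in the header above, is to treat the label map as a single-slice volume and write the NRRD header and raw voxels directly. `write_label_nrrd` is a hypothetical helper; pynrrd’s `nrrd.write` would also work if you keep its axis order in mind.

```python
import numpy as np


def write_label_nrrd(path, label_map, col_spacing, row_spacing, origin, slice_spacing=1.0):
    """Write a 2D label map (indexed [row, column]) as a single-slice 3D NRRD.

    Assumes an axial slice with identity orientation (columns along L, rows
    along P, slice normal along S), matching the diagonal 'space directions'.
    """
    label_map = np.asarray(label_map, dtype="<i2")  # little-endian 'short'
    rows, cols = label_map.shape
    ox, oy, oz = origin
    header = (
        "NRRD0004\n"
        "type: short\n"
        "dimension: 3\n"
        "space: left-posterior-superior\n"
        # NRRD lists the fastest-varying axis first: columns, rows, then the single slice
        f"sizes: {cols} {rows} 1\n"
        f"space directions: ({col_spacing},0,0) (0,{row_spacing},0) (0,0,{slice_spacing})\n"
        "kinds: domain domain domain\n"
        "endian: little\n"
        "encoding: raw\n"
        f"space origin: ({ox},{oy},{oz})\n"
        "\n"  # a blank line separates the header from the voxel data
    )
    with open(path, "wb") as f:
        f.write(header.encode("ascii"))
        # C-order bytes of a [row, column] array have the column index varying fastest
        f.write(np.ascontiguousarray(label_map).tobytes())
```

For example, `write_label_nrrd("lesion1.nrrd", label_map, 0.53320312, 0.53320312, (-131.23339844, -274.73339844, -140.0))`, after which the file can be loaded in Slicer as a labelmap volume on top of the CT.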

Thank you.

Do you know which slice was annotated?

How did you compute directions and origin from the DICOM file?

Did you compute the nrrd file voxels from polygon points?

Yes, I know which slice was annotated. It was slice 123 (given in the AIM file).

I made a new folder with only that DICOM slice and loaded it in 3D Slicer. Then I saved it as an NRRD file and read its header.

As for your last point, I didn’t understand it. Could you please explain what you mean by computing voxels from polygon points?
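“Computing voxels from polygon points” here means rasterization: deciding, for every pixel of the slice, whether its center lies inside the outline. A minimal NumPy sketch using the even-odd (ray casting) rule, assuming the outline is a list of (x, y) vertices in pixel coordinates; `polygon_to_mask` is a hypothetical name, and libraries such as scikit-image provide equivalent functions.

```python
import numpy as np


def polygon_to_mask(points, image_shape):
    """Even-odd point-in-polygon test evaluated at every pixel center.

    points: sequence of (x, y) vertices in pixel units (x = column, y = row).
    Returns a boolean array of shape image_shape, indexed [row, column].
    """
    rows, cols = image_shape
    yy, xx = np.mgrid[:rows, :cols]  # row and column coordinate of each pixel
    inside = np.zeros(image_shape, dtype=bool)
    n = len(points)
    for k in range(n):
        x0, y0 = points[k]
        x1, y1 = points[(k + 1) % n]  # wrap around to close the polygon
        if y0 == y1:
            continue  # horizontal edges never cross a horizontal ray
        # Edge straddles the pixel's row (half-open test avoids double counting vertices)
        straddles = (y0 > yy) != (y1 > yy)
        # x coordinate where the edge crosses that row
        x_cross = x0 + (yy - y0) * (x1 - x0) / (y1 - y0)
        # A ray cast to the right crosses this edge: toggle inside/outside
        inside ^= straddles & (xx < x_cross)
    return inside
```

A circle is just the special case where the inside test can be written directly from the center and radius, which is what the function below does.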

Also the segmentation is just a 2D circle, not a polygon.

If I simply save the label map as NRRD, it doesn’t have any space or space directions keys (I checked). That’s why I tried using the same slice’s header to make it work.

Thank you.

This is the function I created:

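```python
import numpy as np


def create_label_map(annotations, image_shape):
    """Rasterize circles given as ((x, y), radius) in pixel units."""
    label_map = np.zeros(image_shape, dtype=np.short)
    label_value = 1
    # Row (y) and column (x) index grids covering the whole slice
    rr, cc = np.ogrid[:image_shape[0], :image_shape[1]]
    for center, radius in annotations:
        # center is (x, y): x indexes columns, y indexes rows
        circle_mask = (rr - center[1]) ** 2 + (cc - center[0]) ** 2 <= radius ** 2
        label_map[circle_mask] = label_value
        label_value += 1  # each annotation gets its own label value
    return label_map
```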

Center and radius are calculated manually; I have x1, y1, x2, y2 values.
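How the two points map to a circle depends on how the annotation was stored. As far as I know, an AIM `TwoDimensionCircle` stores the center and one point on the circumference; if your points are instead the two ends of a diameter, the center is their midpoint. A small sketch covering both conventions (which one your files use is an assumption to verify, e.g. by overlaying the result with `plt.imshow()`); `circle_from_points` is a hypothetical helper.

```python
import math


def circle_from_points(p1, p2, diameter_ends=False):
    """Return ((cx, cy), radius) in pixel units from two (x, y) points.

    diameter_ends=False: p1 is the center and p2 lies on the circle.
    diameter_ends=True:  p1 and p2 are opposite ends of a diameter.
    """
    (x1, y1), (x2, y2) = p1, p2
    if diameter_ends:
        center = ((x1 + x2) / 2, (y1 + y2) / 2)
        radius = math.hypot(x2 - x1, y2 - y1) / 2
    else:
        center = (x1, y1)
        radius = math.hypot(x2 - x1, y2 - y1)
    return center, radius
```

The returned tuple has the same `(center, radius)` shape that `create_label_map` iterates over, e.g. `create_label_map([circle_from_points((x1, y1), (x2, y2))], (512, 512))`.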

Slices are uniquely identified by SOPInstanceUID. Do you have that information? Relying on an index like 123 would be error-prone: it may look like an Instance Number (0020,0013), but it may also be an index into an array whose frame order we don’t know.

Can you look up the SOPInstanceUID in your annotation file?

If yes, then you can find the corresponding frame and use its ImagePositionPatient, ImageOrientationPatient, and PixelSpacing attributes to get the position and orientation of the slice.
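A sketch of that lookup, assuming pydicom is used to read the series (`pydicom.dcmread` with `stop_before_pixels=True` reads only the header). The helpers `find_frame`, `slice_geometry`, and `load_series` are hypothetical; the first two work on any object exposing the standard DICOM keywords, so they can be tried without files. Note that DICOM `PixelSpacing` is (row spacing, column spacing), so its second value is the step along a row.

```python
import glob


def find_frame(datasets, sop_instance_uid):
    """Return the dataset whose SOPInstanceUID matches, or None."""
    for ds in datasets:
        if str(ds.SOPInstanceUID) == sop_instance_uid:
            return ds
    return None


def slice_geometry(ds):
    """Origin and in-plane step vectors (LPS, mm) of one DICOM slice."""
    origin = [float(v) for v in ds.ImagePositionPatient]
    iop = [float(v) for v in ds.ImageOrientationPatient]
    row_spacing, col_spacing = (float(v) for v in ds.PixelSpacing)
    # First IOP triplet: direction of increasing column index (along a row)
    col_axis = [c * col_spacing for c in iop[0:3]]
    # Second IOP triplet: direction of increasing row index (down a column)
    row_axis = [c * row_spacing for c in iop[3:6]]
    return origin, col_axis, row_axis


def load_series(folder):
    """Read the headers of all .dcm files in a folder (requires pydicom)."""
    import pydicom  # imported here so the helpers above work without it
    return [pydicom.dcmread(p, stop_before_pixels=True)
            for p in sorted(glob.glob(f"{folder}/*.dcm"))]
```

For example, `slice_geometry(find_frame(load_series("path/to/CT"), uid))` with the UID from the annotation file; the folder path is a placeholder.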

To get the slice spacing (third spacing value), you need to find the two closest slices (based on analysis of the ImagePositionPatient and ImageOrientationPatient tags in all frames). Alternatively, you can create a markups JSON file that defines the markups (ROI, plane, closed curve, …) in physical space.
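For the markups route, a circle can be written as a closed curve whose control points are computed in physical (LPS) coordinates from the slice geometry. A minimal sketch, assuming `origin`, `col_axis`, and `row_axis` come from the slice’s ImagePositionPatient, ImageOrientationPatient, and PixelSpacing as described above. The JSON keys follow the Slicer markups format as I recall it; the `@schema` URL in particular is an assumption, so compare with a `.mrk.json` file saved by Slicer itself. `circle_markups_json` is hypothetical.

```python
import json
import math


def circle_markups_json(center_px, radius_px, origin, col_axis, row_axis, n_points=32):
    """Closed curve (LPS, mm) approximating a circle given in pixel units.

    col_axis / row_axis: physical step (mm vector) for +1 column / +1 row.
    """
    cx, cy = center_px  # x = column index, y = row index
    points = []
    for k in range(n_points):
        a = 2 * math.pi * k / n_points
        i = cx + radius_px * math.cos(a)  # column index
        j = cy + radius_px * math.sin(a)  # row index
        # Physical position = origin + i * column step + j * row step
        pos = [origin[d] + i * col_axis[d] + j * row_axis[d] for d in range(3)]
        points.append({"label": f"C-{k + 1}", "position": pos})
    doc = {
        # Assumed schema URL; check it against a file written by Slicer
        "@schema": "https://raw.githubusercontent.com/slicer/slicer/master/Modules/Loadable/Markups/Resources/Schema/markups-schema-v1.0.0.json#",
        "markups": [{
            "type": "ClosedCurve",
            "coordinateSystem": "LPS",
            "controlPoints": points,
        }],
    }
    return json.dumps(doc, indent=2)
```

Because the points are already in patient coordinates, Slicer can place the curve on the CT without any NRRD header at all.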

This is what I found:

```xml
<sopInstanceUid root="1.3.6.1.4.1.14519.5.2.1.4334.1501.553921625749272741224744327937"/>
```

I theorised that the slice number is most likely given here:

```xml
<ImageAnnotation>
  <uniqueIdentifier root="bh5cfg5vqmerg4db8coxnyhr05od845fsltt3gzb"/>
  <typeCode code="LT1" codeSystem="Lung Template" codeSystemName="Lung Template"/>
  <dateTime value="2014-09-03T18:07:05"/>
  <name value="Lesion1"/>
  <comment value="CT / THORAX LUNG 1MM / 123"/>
```

and here:

```xml
<referencedFrameNumber value="123"/>
```
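Since AIM is plain XML, these values can be pulled out with the standard library. A namespace-agnostic sketch: the `sopInstanceUid`/`root` and `referencedFrameNumber`/`value` names come from the snippets above, while the `TwoDimensionSpatialCoordinate` element with `x`/`y` children is an assumption about how your file stores the circle points, so check it against the actual file. `read_aim_markup` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def local(tag):
    """Strip the '{namespace}' prefix that ElementTree adds to tag names."""
    return tag.rsplit("}", 1)[-1]


def read_aim_markup(path_or_file):
    """Return (sop_instance_uid, frame_number, [(x, y), ...]) from an AIM XML file."""
    root = ET.parse(path_or_file).getroot()
    uid, frame, points = None, None, []
    for el in root.iter():  # document order, so points keep their stored order
        name = local(el.tag)
        if name == "sopInstanceUid" and uid is None:
            uid = el.get("root")
        elif name == "referencedFrameNumber" and frame is None:
            frame = int(el.get("value"))
        elif name == "TwoDimensionSpatialCoordinate":  # assumed element name
            values = {local(c.tag): c.get("value") for c in el}
            points.append((float(values["x"]), float(values["y"])))
    return uid, frame, points
```

The UID is what should be used to locate the DICOM frame; the frame number is only kept for cross-checking.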

What do you mean by “closest”? Closest in the values of both of these attributes?

And what exactly should I do after finding them? Am I supposed to stack them and make a 3D volume NRRD file?

Okay, I shall try this out too!

Thank you.

This looks good, use this UID then. This uniquely identifies the frame.

Going from 2D to 3D in general is a very complex task, as it requires 3D reconstruction of a 3D image from 2D slices, where the spacing between slices may vary, slices may not even be parallel, and you can have many slices for the same position, potentially at multiple time points. However, if you only need to work with a specific data set and you know that slices are parallel and equally spaced throughout the entire series, then it may be enough to find the slice in the image series that has the closest ImagePositionPatient value and use that distance as the slice spacing.
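“Closest” here means closest along the slice normal: project every frame’s ImagePositionPatient onto the normal (the cross product of the two ImageOrientationPatient direction vectors) and take the smallest nonzero distance to the annotated frame. The sketch below also checks the two assumptions stated above (all frames parallel, equal spacing) and raises an error if they fail; `slice_spacing` and its tolerance are hypothetical choices.

```python
def cross(a, b):
    """Cross product of two 3-vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def slice_spacing(target_ipp, iop, all_ipps, all_iops, tol=1e-3):
    """Distance from the annotated slice to its nearest neighbour along the normal.

    Raises ValueError if the series is not parallel or not equally spaced.
    """
    if any(max(abs(a - b) for a, b in zip(iop, o)) > tol for o in all_iops):
        raise ValueError("slices are not parallel")
    normal = cross(iop[0:3], iop[3:6])
    # Position of each slice along the normal (mm)
    heights = sorted(sum(p[d] * normal[d] for d in range(3)) for p in all_ipps)
    # Ignore repeated positions (e.g. the same slice at several time points)
    gaps = [b - a for a, b in zip(heights, heights[1:]) if b - a > tol]
    if not gaps:
        raise ValueError("need at least two distinct slice positions")
    if max(gaps) - min(gaps) > tol:
        raise ValueError("slice spacing is not uniform")
    target = sum(target_ipp[d] * normal[d] for d in range(3))
    return min(abs(h - target) for h in heights if abs(h - target) > tol)
```

If the checks pass, Slicer’s own DICOM import will reconstruct the same regular grid, so stacking slices by hand should not be necessary.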

You can also let Slicer do the 3D reconstruction and then create a segment using cylinder sources in physical space.
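In Slicer this would mean building a `vtkCylinderSource` positioned in physical coordinates and importing it into a segmentation. The same idea, stripped down to NumPy, is to compute the physical position of every voxel center of the reconstructed volume and keep those inside a cylinder around the lesion. A sketch, assuming the volume geometry is given as an LPS origin and a 3×3 `directions` matrix whose row `a` is the mm step for index `a` (like the `space directions` array above); `cylinder_mask` is hypothetical.

```python
import numpy as np


def cylinder_mask(sizes, origin, directions, p0, p1, radius):
    """Boolean mask (indexed [i, j, k]) of voxels inside a cylinder.

    origin: LPS position of voxel (0, 0, 0); directions: 3x3, row a = mm step of index a.
    p0, p1: LPS end points of the cylinder axis; radius in mm.
    """
    origin = np.asarray(origin, float)
    directions = np.asarray(directions, float)
    p0, p1 = np.asarray(p0, float), np.asarray(p1, float)
    ijk = np.stack(np.meshgrid(*[np.arange(n) for n in sizes], indexing="ij"), axis=-1)
    pos = origin + ijk @ directions  # physical position of every voxel center
    axis = p1 - p0
    length = np.linalg.norm(axis)
    axis = axis / length
    rel = pos - p0
    t = rel @ axis  # distance along the axis, measured from p0
    radial = np.linalg.norm(rel - t[..., None] * axis, axis=-1)
    return (t >= 0) & (t <= length) & (radial <= radius)
```

With `p0` and `p1` placed half a slice thickness above and below the annotated circle’s center, the mask covers just the annotated slice of the 3D volume.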

This looks OK, if center and radius are specified in pixels and pixel spacing is the same along both axes.
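If the pixel spacing differs between the two axes, or the radius is known in millimetres, the circle becomes an ellipse in pixel units. A variant of the inside test from `create_label_map` under that assumption, with the radius in mm and the spacing given in DICOM `PixelSpacing` order (row spacing, column spacing); `circle_mask_mm` is a hypothetical name.

```python
import numpy as np


def circle_mask_mm(center_px, radius_mm, image_shape, pixel_spacing):
    """Mask of pixels within radius_mm of center_px = (x, y), given in pixel units.

    pixel_spacing = (row_spacing, column_spacing) in mm, as in DICOM PixelSpacing.
    """
    row_spacing, col_spacing = pixel_spacing
    rr, cc = np.ogrid[:image_shape[0], :image_shape[1]]
    # Convert pixel offsets to millimetres before measuring the distance
    dy = (rr - center_px[1]) * row_spacing
    dx = (cc - center_px[0]) * col_spacing
    return dx ** 2 + dy ** 2 <= radius_mm ** 2
```

With equal spacing and `radius_mm = radius_px * spacing`, this selects the same pixels as the original function.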

Okay. I will.

Ahh, I understand. I’ll try this. Hopefully it works!

My professor requires me to visualise already segmented lung areas, so I don’t have to create any segmentations for now.

Thank you so much for your time. Very grateful.