Meta’s Segment Anything Model 2 was announced last week. Are there any thoughts about its applicability to 3D images?

Maybe this is of interest:

Also this: [2408.03322] Segment Anything in Medical Images and Videos: Benchmark and Deployment

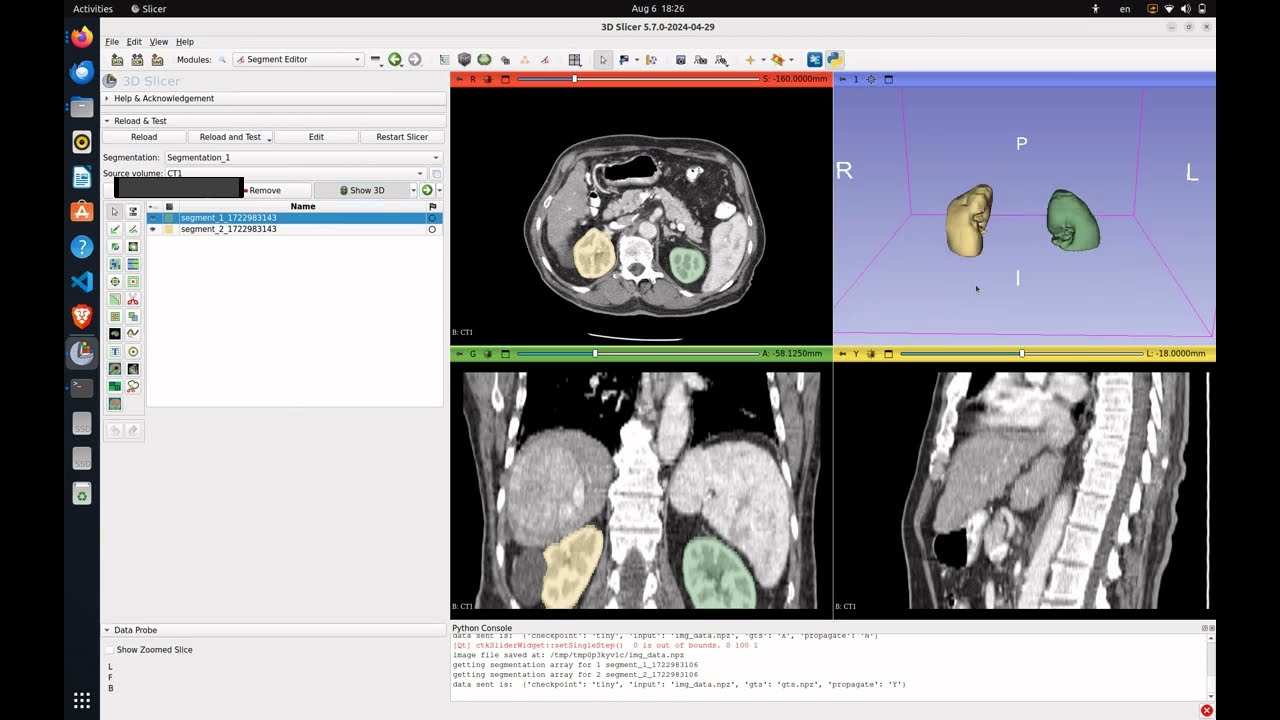

With a Slicer module! GitHub - bowang-lab/MedSAMSlicer at SAM2

I was aware of MedSAMSlicer, but I didn’t notice they have a SAM2 branch. Thank you for pointing it out.


They uploaded their preprint five days after the SAM 2 preprint…

Many people celebrate the performance of SAM when they see demo videos, but they don’t realize that they are looking at trivial segmentation tasks (e.g., kidney segmentation on a single CT slice with no similar structures nearby), and they don’t notice when the segmentation fails spectacularly on moderately difficult tasks (such as missing half of the liver). See for example @alireza’s recent experiments with SAM2 for 3D medical image segmentation, with quite poor results: Alireza Sedghi on LinkedIn: SAM 2 Released: 3D Medical Image Segmentation Solved? I got a screen…
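To put a number on failures like a half-missing liver, one common overlap measure is the Dice similarity coefficient, Dice = 2|A ∩ B| / (|A| + |B|), where A is the predicted mask and B the reference. The NumPy sketch below uses made-up toy masks (not data from the experiments linked above) to show that a prediction covering exactly half of a structure, and nothing else, still scores about 0.67, which can look deceptively acceptable in a summary table.

```python
import numpy as np


def dice(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice similarity coefficient between two binary masks of equal shape."""
    pred = pred.astype(bool)
    ref = ref.astype(bool)
    denom = pred.sum() + ref.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * np.logical_and(pred, ref).sum() / denom


# Toy 3D "organ": a 20 x 40 x 40 block inside a 64^3 volume (illustrative only).
ref = np.zeros((64, 64, 64), dtype=bool)
ref[20:40, 10:50, 10:50] = True

# Prediction that captures only the upper half of the organ along the first axis.
pred = np.zeros_like(ref)
pred[20:30, 10:50, 10:50] = True

print(round(dice(pred, ref), 3))  # 0.667
```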

SAM performance is remarkable when used interactively on 2D images of everyday objects, the well-engineered web demos make SAM easily accessible to a wide audience, and open-sourcing the model is exemplary. It is also nice that it is a general-purpose tool that could be made to work on any imaging modality to interactively segment any structure. However, 99% of users will not want any interactivity in the segmentation and don’t want to work slice-by-slice; they just want fast, fully automatic 3D segmentation for free, and they can already get it via TotalSegmentator, MONAIAuto3DSeg, DentalSegmentator, etc. So, my overall impression is that, considering their clinical relevance and impact, SAM-based models may not deserve as much attention as they are getting.

TotalSegmentator, MONAIAuto3DSeg, DentalSegmentator, etc.

Ground truth for training them needs to come from somewhere. SAM is a great interactive segmentation tool.

Yes, and this is its main limitation: it requires interaction. If the user needs to interact with the image for several (potentially tens of) seconds, then the value is questionable, because the clinician can make the standard, well-proven measurements in about the same time without 3D segmentation. Having a 3D segmentation may have some extra value, but the cost of the clinician’s extra time usually makes this a tough sell.
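As a concrete example of the "extra value" a 3D segmentation can offer, a structure's volume follows directly from the mask's voxel count and the voxel spacing, which a single linear measurement cannot give. A minimal NumPy sketch, assuming a boolean mask array and per-axis spacing in millimetres; the mask and spacing below are invented for illustration:

```python
from typing import Sequence

import numpy as np


def segment_volume_ml(mask: np.ndarray, spacing_mm: Sequence[float]) -> float:
    """Volume of a binary 3D mask in millilitres (1 mL = 1000 mm^3)."""
    voxel_volume_mm3 = float(np.prod(spacing_mm))  # volume of one voxel
    return int(np.count_nonzero(mask)) * voxel_volume_mm3 / 1000.0


# Hypothetical example: a 10 x 20 x 20 voxel block with 2.5 x 0.8 x 0.8 mm voxels.
mask = np.zeros((50, 100, 100), dtype=bool)
mask[10:20, 30:50, 30:50] = True
print(segment_volume_ml(mask, (2.5, 0.8, 0.8)))
```

Here 4000 voxels of 1.6 mm³ each give about 6.4 mL; the spacing must be in the same axis order as the array.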

In contrast, fully automatic segmentation is clinically useful and impactful, because it both reduces the clinician’s effort and can provide richer results. Interactive segmentation tools can never come close to automatic methods in routine clinical use.

Interactive methods may still play a role in research, in generating ground-truth training data, or in helping with very difficult segmentations. But this is a very small arena, crowded with various tools, and SAM-based tools do not seem to stand out in any way: they are not particularly well positioned for solving very hard problems, for doing 3D segmentation, or for providing robust, bias-free, consistent, or anatomically correct segmentations.

SAM/SAM2/MedSAM/SAM-Med3D/etc. might find a niche where they are useful in medical imaging; maybe in the future they can even carve out a larger area where they are successful. I guess I just don’t understand the excitement about them when neither the current performance nor the future prospects look so great. Anyway, if anybody can set up a nice 3D segmentation tool in Slicer based on SAM, let me know: I’ll try it, I’m ready to change my mind, and I will happily advertise it if it works very well.

SAM is foreign to me, so I won’t comment on SAM itself.

This means that clinicians want already-processed data, i.e., results only. Radiologists have provided that for decades, and they still do.

‘AI’ tools are trained on normal data and certainly provide ‘good enough’ results on new, near-normal data, though only for well-defined ‘major organ’ targets. ‘Good enough’, because they are far from being an immediate and undisputed reference for discrete measurements.

Clinicians deal with pathological situations that do not correspond to normal inputs. The spectrum of anomalous anatomies is very wide, nearly infinite. Feeding those ‘AI’ algorithms such diverse anatomies would take more than infinite time… and funding that clinicians are not ready to provide.

To me, the current situation is that clinicians have excessive expectations of ‘auto-tools’. Digital tools are not yet ready for effortless, one-click consumption. Manual segmentation will prevail for a decade or more. If clinicians find it too hard to invest in understanding and segmenting, their best bet remains the radiologist.

As for technologists, they should not oversell an easy life to clinicians either.

I agree with this comment. My experience with SAM on real segmentation tasks has been less than ideal. However, I can see two specific cases where it might be useful for the SlicerMorph community:

- We often work with organisms and scans where there is no standard anatomy, orientation, or calibration, and there will be no automated tools (specifically ML tools) to guide us. In those situations, users tend to do slice-by-slice segmentation with a lot of interaction anyway. So, if SAM can be integrated into Slicer as a Segment Editor effect and used that way, I am willing to give it a try.

- We have a very specific use case in which we need to remove the background (which may or may not be very uniform) from 2D photographs of specimens taken at various angles, to prepare them for 3D photogrammetry. Most of the time, algorithms like SIFT do that well enough programmatically, but they sometimes “eat” into the specimen when the contrast is not high. If a SAM-like tool can do this better with user guidance, then I am happy to try it.
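For the interactive, slice-by-slice workflow in the first point, a common pattern with box-prompted 2D models is to segment one slice from a user-drawn box and then reuse the bounding box of each result as the prompt for the neighbouring slice. The sketch below shows only that propagation loop, under stated assumptions: `segment_slice` is a hypothetical stand-in for a real 2D model call (a plain intensity threshold inside the box, so the example runs without SAM), and none of these function names come from SAM, MedSAMSlicer, or Slicer.

```python
from typing import Callable, Optional, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (row0, col0, row1, col1), end-exclusive


def bbox(mask2d: np.ndarray, margin: int = 2) -> Optional[Box]:
    """Bounding box of a 2D mask grown by `margin` pixels; None if the mask is empty."""
    rows = np.flatnonzero(mask2d.any(axis=1))
    cols = np.flatnonzero(mask2d.any(axis=0))
    if rows.size == 0:
        return None
    h, w = mask2d.shape
    return (max(int(rows[0]) - margin, 0), max(int(cols[0]) - margin, 0),
            min(int(rows[-1]) + 1 + margin, h), min(int(cols[-1]) + 1 + margin, w))


def segment_slice(image2d: np.ndarray, box: Box, threshold: float = 0.5) -> np.ndarray:
    """HYPOTHETICAL stand-in for a box-prompted 2D model: threshold inside the box."""
    r0, c0, r1, c1 = box
    out = np.zeros(image2d.shape, dtype=bool)
    out[r0:r1, c0:c1] = image2d[r0:r1, c0:c1] > threshold
    return out


def propagate(volume: np.ndarray, start: int, box: Box,
              seg: Callable[[np.ndarray, Box], np.ndarray] = segment_slice) -> np.ndarray:
    """Segment slice `start` from a user box, then walk outwards in both directions,
    prompting each slice with the box of its neighbour's result. Stops at an empty slice."""
    masks = np.zeros(volume.shape, dtype=bool)
    masks[start] = seg(volume[start], box)
    for step in (1, -1):
        prev = masks[start]
        z = start + step
        while 0 <= z < volume.shape[0]:
            b = bbox(prev)
            if b is None:
                break  # previous slice came back empty: stop in this direction
            masks[z] = seg(volume[z], b)
            prev = masks[z]
            z += step
    return masks


# Synthetic volume: a bright block on slices 8-21; the user boxes it on slice 15.
vol = np.zeros((30, 64, 64))
vol[8:22, 20:40, 20:40] = 1.0
result = propagate(vol, start=15, box=(15, 15, 45, 45))
print(np.flatnonzero(result.any(axis=(1, 2))))  # indices of slices with a non-empty mask
```

The `margin` lets the mask grow from slice to slice, but it also lets a leak on one slice spread to the next, which is one reason such tools still need a user checking each step.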

These are not sufficiently broad use cases, and there are alternative solutions for them, so I am not motivated to spend time and resources on the integration. But if someone wants to do it, and do it in a robust way (I couldn’t even get started with the previous extensions; the installation steps were not trivial), I am of course willing to try, use, and promote it, if it works.

For awareness, here’s a new preprint by NVIDIA discussing this topic: A Short Review and Evaluation of SAM2’s Performance in 3D CT Image Segmentation (arXiv)

Hi! I have followed all the instructions to download and set up the MedSAM2 plugin in Slicer, but Slicer stops responding between the segmentation and the downloading of results. In the command line, the Python server seems to be running all right. I have tried different models and all of them failed. What could be the problem?

Slicer just stops responding, like this:

This is what is shown in my Miniconda prompt:

```
(base) C:\Users\dell>cd C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer

(base) C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer>conda activate medsam2

(medsam2) C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer>python server.py
* Serving Flask app 'server'
* Debug mode: on
WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.
* Running on all addresses (0.0.0.0)
* Running on http://127.0.0.1:8080
* Running on http://192.168.0.100:8080
Press CTRL+C to quit
* Restarting with stat
* Debugger is active!
* Debugger PIN: 477-329-984
127.0.0.1 - - [01/Jul/2025 15:15:32] "POST /upload HTTP/1.1" 200 -
infering img_data.npz
C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer\sam2\modeling\sam\transformer.py:270: UserWarning: Memory efficient kernel not used because: (Triggered internally at C:\cb\pytorch_1000000000000\work\aten\src\ATen\native\transformers\cuda\sdp_utils.cpp:773.)
  out = F.scaled_dot_product_attention(q, k, v, dropout_p=dropout_p)
C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer\sam2\modeling\sam\transformer.py:270: UserWarning: Memory Efficient attention has been runtime disabled. (Triggered internally at C:\cb\pytorch_1000000000000\work\aten\src\ATen/native/transformers/sdp_utils_cpp.h:558.)
  out = F.scaled_dot_product_attention(q, k, v, dropout_p=dropout_p)
C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer\sam2\modeling\sam\transformer.py:270: UserWarning: Flash attention kernel not used because: (Triggered internally at C:\cb\pytorch_1000000000000\work\aten\src\ATen\native\transformers\cuda\sdp_utils.cpp:775.)
  out = F.scaled_dot_product_attention(q, k, v, dropout_p=dropout_p)
C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer\sam2\modeling\sam\transformer.py:270: UserWarning: Torch was not compiled with flash attention. (Triggered internally at C:\cb\pytorch_1000000000000\work\aten\src\ATen\native\transformers\cuda\sdp_utils.cpp:599.)
  out = F.scaled_dot_product_attention(q, k, v, dropout_p=dropout_p)
C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer\sam2\modeling\sam\transformer.py:270: UserWarning: CuDNN attention kernel not used because: (Triggered internally at C:\cb\pytorch_1000000000000\work\aten\src\ATen\native\transformers\cuda\sdp_utils.cpp:777.)
  out = F.scaled_dot_product_attention(q, k, v, dropout_p=dropout_p)
C:\device\researches\Hepatoma\MRIV\MRIV - Code\SAM2\MedSAMSlicer\sam2\modeling\sam\transformer.py:270: UserWarning: Expected query, key and value to all be of dtype: {Half, BFloat16}. Got Query dtype: float, Key dtype: float, and Value dtype: float instead. (Triggered internally at C:\cb\pytorch_1000000000000\work\aten\src\ATen/native/transformers/sdp_utils_cpp.h:110.)
  out = F.scaled_dot_product_attention(q, k, v, dropout_p=dropout_p)
C:\Users\dell\miniconda3\envs\medsam2\Lib\site-packages\torch\nn\modules\module.py:1747: UserWarning: Flash Attention kernel failed due to: No available kernel. Aborting execution.
Falling back to all available kernels for scaled_dot_product_attention (which may have a slower speed).
  return forward_call(*args, **kwargs)
Middle Slice Mask Calculated
127.0.0.1 - - [01/Jul/2025 15:15:38] "POST /run_script HTTP/1.1" 200 -
INFO:werkzeug:127.0.0.1 - - [01/Jul/2025 15:15:38] "POST /run_script HTTP/1.1" 200 -
```
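Since the server log shows both POST requests returning 200, one way to narrow down whether the hang is on the server side or the Slicer side is to call the local server from a separate Python session with a timeout, so a stalled request reports back instead of blocking indefinitely. A minimal standard-library sketch, assuming the server listens on `http://127.0.0.1:8080` as in the log; the path being probed and the optional POST body are placeholders, not the MedSAMSlicer API (the plugin's `server.py` defines the actual routes and payloads):

```python
import socket
import urllib.error
import urllib.request
from typing import Optional


def probe(url: str, data: Optional[bytes] = None, timeout_s: float = 10.0) -> str:
    """Request `url` (POST if `data` is given, else GET) and summarize the outcome
    instead of blocking forever."""
    try:
        with urllib.request.urlopen(url, data=data, timeout=timeout_s) as resp:
            body = resp.read()
            return f"HTTP {resp.status}, {len(body)} bytes"
    except urllib.error.HTTPError as err:  # server answered with a 4xx/5xx status
        return f"HTTP {err.code}"
    except urllib.error.URLError as err:   # refused connection, or timeout while connecting
        return f"unreachable: {err.reason}"
    except socket.timeout:                 # connected, but no data within the timeout
        return f"no response within {timeout_s} s"


if __name__ == "__main__":
    # Placeholder path: replace with a route that server.py actually defines.
    print(probe("http://127.0.0.1:8080/", timeout_s=5.0))
```

If such a probe answers promptly while Slicer still freezes, the problem is more likely in how the plugin waits for or reads the result than in the model itself.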

See also: Issues · bowang-lab/MedSAMSlicer

Hi!

Maybe SAM 3D can now help you easily remove backgrounds for your SfM photogrammetry work.

I haven’t used it yet, but it sounds very promising. I don’t know whether it works with batch editing.
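Whichever model ends up producing the masks, the batch part of background removal for photogrammetry is simple to script around it. A minimal NumPy sketch, assuming each photo is an H x W x 3 `uint8` array and a hypothetical `make_mask` callable returns a boolean foreground mask per photo (here a crude brightness threshold standing in for SAM, SAM 3D, or any other segmenter). It stores the mask in an alpha channel rather than painting over the background, so the original pixels are kept:

```python
from typing import Callable, Iterable, List

import numpy as np


def brightness_mask(photo: np.ndarray, threshold: int = 60) -> np.ndarray:
    """HYPOTHETICAL placeholder segmenter: pixels brighter than `threshold` count as specimen."""
    return photo.mean(axis=2) > threshold


def cut_out(photo: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Return an RGBA copy of `photo` whose alpha is 255 on the specimen and 0 elsewhere."""
    if mask.shape != photo.shape[:2]:
        raise ValueError("mask and photo sizes differ")
    alpha = np.where(mask, 255, 0).astype(np.uint8)
    return np.dstack([photo, alpha])


def batch_cut_out(photos: Iterable[np.ndarray],
                  make_mask: Callable[[np.ndarray], np.ndarray] = brightness_mask) -> List[np.ndarray]:
    """Apply the same masking step to every photo in a capture set."""
    return [cut_out(p, make_mask(p)) for p in photos]


# Synthetic example: dark background with a bright square "specimen".
photo = np.full((100, 120, 3), 20, dtype=np.uint8)
photo[30:70, 40:80] = 200
rgba = batch_cut_out([photo, photo.copy()])
print(rgba[0].shape, int((rgba[0][..., 3] == 255).sum()))
```

Swapping `brightness_mask` for a real model call is the only change needed to batch a different segmenter.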