Hi @MPhilip

Our training materials and tutorials were mostly designed to teach our tools to medical professionals who are already familiar with tracing lesions in standard clinical tools/viewers and who have the medical expertise to identify lesions. I’m not sure I can teach you all of that, but I’ll do my best to help with your questions:

> In the case of a disconnected blob or elongated lesion…

You have to split such a lesion into several regions and segment each region separately.

> I felt that it is taking some time to locate the tumour on different slices/views

Once I find the lesion in any of the three (red/green/yellow) slice views, it takes me just a few seconds to find axial/coronal/sagittal slices close to the lesion center. The “Slice Intersections” option is really helpful in this process:

Turn it on via the Slicer main tool bar:

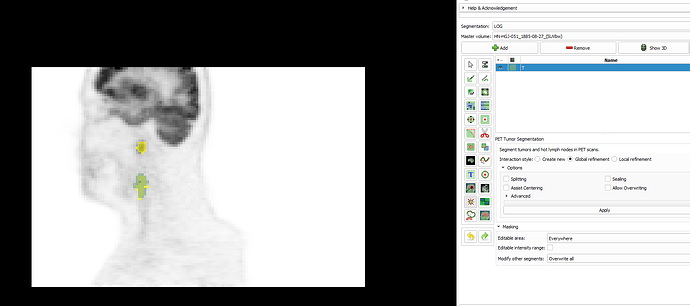

Example: Initial view of PET scan with a lesion and slice intersections turned on:

Find the lesion in one of the views, e.g. the yellow one in this case. Then move the mouse cursor onto it while holding down the Shift key. Once the cursor is on the lesion, release the Shift key. The red and green slices will now show the lesion as well.

In the red/green slices you can see that the yellow slice is not yet close to the lesion center. Move it closer by again holding down the Shift key and moving the mouse cursor to the center of the structure in the red or green slice. Now all views are close to the lesion center.
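If it helps to think about the centering steps above numerically, here is a small Python sketch. It is only an illustration and does not use Slicer's API: the function name `hottest_voxel`, the toy volume, and the index order `[axial][coronal][sagittal]` are all my assumptions, and it treats the brightest voxel as a stand-in for the lesion center, which will not hold for every lesion.

```python
def hottest_voxel(volume):
    """Return the (axial, coronal, sagittal) index of the brightest voxel.

    `volume` is a nested list indexed as volume[axial][coronal][sagittal].
    The brightest voxel is used as a rough, assumed proxy for the lesion center.
    """
    best_value = None
    best_index = None
    for k, plane in enumerate(volume):
        for j, row in enumerate(plane):
            for i, value in enumerate(row):
                if best_value is None or value > best_value:
                    best_value = value
                    best_index = (k, j, i)
    return best_index


# Toy 3x3x3 "PET scan": background of zeros with one bright voxel.
volume = [[[0 for _ in range(3)] for _ in range(3)] for _ in range(3)]
volume[1][2][0] = 9

# These three indices are the slices that all three views should show
# once every view has been moved onto the lesion.
axial, coronal, sagittal = hottest_voxel(volume)
```

Holding Shift and moving the cursor does the same thing interactively: the point under the cursor fixes the slice position in the other two views.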

> Was this the 3D view(shown below) that will give an overall picture of the tumour before using any semi-automatic segmentation tools?

I think that to get a complete picture of the lesion and its segmentation, you need to scroll through all the slices it intersects, preferably in all three anatomical planes.

> The volume appears smaller than the manually segmented tumour. Can it be improved or is this just right?

When we designed the algorithm, we tuned it to reflect the tracing behavior of our most experienced radiation oncologist, that is, where he would place the boundary given the properties of the lesion and its surrounding area. But different medical experts have different preferences; some draw their contours slightly larger or smaller than others.

If, based on your medical experience, you think the overall contour is too small after the initial segmentation, you can use the “global refinement” option to adjust the gray-value level determined by the algorithm. Switch to this option and click in the PET scan at a location whose gray level matches where you think the surface should be. The whole surface will adjust to this gray level.
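To give a feeling for why picking a different gray level makes the whole contour grow or shrink, here is a toy Python sketch. It uses plain thresholding on a made-up 2D image, which is not the actual algorithm in our tool; `segment_at_level`, `area`, and the pixel values are hypothetical and only for illustration.

```python
def segment_at_level(image, level):
    """Mark every pixel whose gray value is at or above `level`."""
    return [[1 if value >= level else 0 for value in row] for row in image]


def area(mask):
    """Count the marked pixels in a binary mask."""
    return sum(sum(row) for row in mask)


# Toy "lesion": bright in the middle, fading toward the edges.
image = [
    [0, 1, 1, 1, 0],
    [1, 3, 5, 3, 1],
    [1, 5, 9, 5, 1],
    [1, 3, 5, 3, 1],
    [0, 1, 1, 1, 0],
]

tight = segment_at_level(image, 5)  # higher gray level, smaller region
loose = segment_at_level(image, 3)  # lower gray level, larger region
```

Clicking on a darker location farther from the lesion center corresponds to choosing the lower level here, so the resulting region comes out larger everywhere at once.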