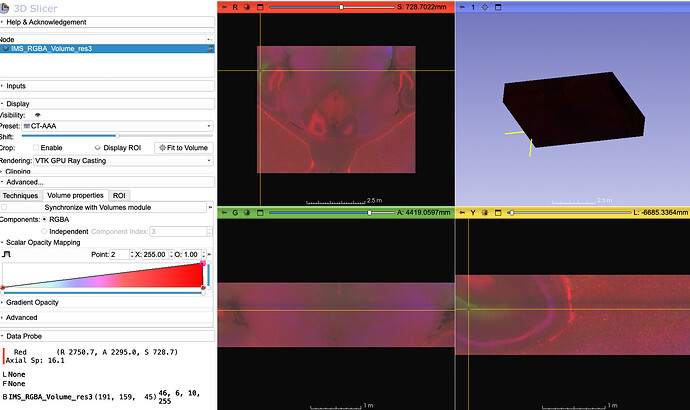

I am trying to import some multichannel microscopy data into Slicer. I can get the RGBA volume loaded and shown correctly in the slice views. But when I volume render it (by dragging and dropping it into the 3D view), all I get is a black box. Using the slider seems to have no effect (or rather, the only effect is that the box disappears). Switching to individual components doesn’t seem to help either. I remember being able to render RGBA images before. Is this a regression, or is it something about the data? Here is the link to the data: Box

The issue is that your alpha is 255 everywhere, so the block is the correct rendering.

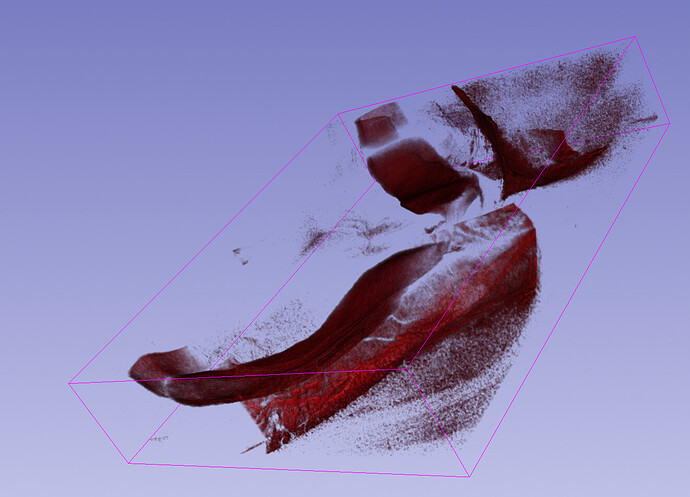

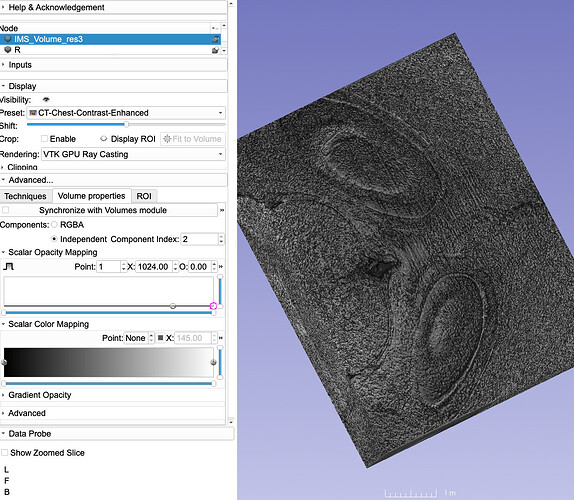
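One quick way to confirm that a constant alpha channel is the cause is to inspect the alpha component of the voxel array. This is a minimal NumPy sketch, assuming the components are stored in the last axis (as with the `arrayFromVolume` snippets in this thread); `alpha_is_constant` is a hypothetical helper name:

```python
import numpy as np

def alpha_is_constant(rgba):
    """Return True if every voxel has the same alpha value.

    Assumes a (..., 4) array with alpha as the last component,
    e.g. the array returned by slicer.util.arrayFromVolume().
    """
    alpha = rgba[..., 3]
    return bool(np.all(alpha == alpha.flat[0]))

# Tiny synthetic RGBA volume (k, j, i, 4) with alpha = 255 everywhere
vol = np.zeros((2, 3, 4, 4), dtype=np.uint8)
vol[..., 3] = 255
print(alpha_is_constant(vol))  # True
```

In the Slicer Python console this would be called as `alpha_is_constant(arrayFromVolume(getNode("IMS*")))`.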

If you make the alpha into something meaningful then you can look at different structures. E.g. here I’ve made alpha be the red channel:

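```python
from slicer.util import arrayFromVolume, arrayFromVolumeModified, getNode

volumeNode = getNode("IMS*")         # first node whose name matches IMS*
a = arrayFromVolume(volumeNode)      # NumPy view of the voxels, components in the last axis
a[..., 3] = a[..., 0]                # use the red channel as alpha
arrayFromVolumeModified(volumeNode)  # notify Slicer that the voxels changed so views update
```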

And here the blue:

Thanks @pieper. What is a meaningful alpha in this case, 0.5? I want to see it as RGB as opposed to individual channels.

I’m not sure; you may need to experiment. If alpha is constant, the rendering will be a solid block like the one you had before. Ideally you would make an alpha channel that identifies a structure or region of interest, and the color would then carry some other information. For visible light, something simple like brightness, `0.2*r + 0.7*g + 0.1*b`, would be a good alpha, but I’m not sure that’s what you want here.

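```python
from slicer.util import arrayFromVolume, arrayFromVolumeModified, getNode

volumeNode = getNode("IMS*")
a = arrayFromVolume(volumeNode)
# Brightness-weighted alpha; the weights sum to 1, so the result stays in range
a[..., 3] = .2 * a[..., 0] + .7 * a[..., 1] + .1 * a[..., 2]
arrayFromVolumeModified(volumeNode)
```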
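Assigning a float expression into an integer array silently truncates, and weights that sum to more than 1 could exceed the dtype’s range. A minimal sketch that computes the weighted alpha in floating point, clips it to the valid range of the array’s dtype, and casts back; `weighted_alpha` is a hypothetical helper, and an integer (..., 4) array is assumed:

```python
import numpy as np

def weighted_alpha(rgba, weights=(0.2, 0.7, 0.1)):
    """Alpha as a weighted sum of the R, G, B components.

    Assumes an integer (..., 4) array. The sum is computed in float64,
    rounded, clipped to the dtype's range and cast back, so large
    weights cannot wrap around.
    """
    rgb = rgba[..., :3].astype(np.float64)
    alpha = rgb @ np.asarray(weights, dtype=np.float64)  # contracts the last axis
    info = np.iinfo(rgba.dtype)
    return np.clip(np.rint(alpha), info.min, info.max).astype(rgba.dtype)

vol = np.zeros((1, 1, 2, 4), dtype=np.uint8)
vol[0, 0, 0, :3] = (100, 200, 50)
vol[..., 3] = weighted_alpha(vol)  # same in-place pattern as a[..., 3] = ...
print(vol[0, 0, 0, 3])  # 165
```

The same function works unchanged for 16-bit data, since the clipping range is taken from the array’s own dtype.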

An average of all three might be good. This basically makes the black background transparent and renders only where there’s “signal” in the images.

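```python
from slicer.util import arrayFromVolume, arrayFromVolumeModified, getNode

volumeNode = getNode("IMS*")
a = arrayFromVolume(volumeNode)
# Alpha = average of R, G, B: black background voxels become transparent
a[..., 3] = a[..., :3].mean(axis=-1)
arrayFromVolumeModified(volumeNode)
```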
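If the background is not exactly black, averaging alone leaves it partly opaque. A minimal sketch that combines the average with a noise floor; `mean_alpha` and its `threshold` parameter are hypothetical names, the threshold value has to be chosen by the user, and components are assumed to be in the last axis:

```python
import numpy as np

def mean_alpha(rgba, threshold=0):
    """Alpha = mean of R, G, B; voxels whose mean is below `threshold`
    become fully transparent (alpha = 0).

    `threshold` is a hypothetical noise floor in the same units as the data.
    """
    mean = rgba[..., :3].mean(axis=-1)  # float64 for integer input
    mean[mean < threshold] = 0
    return np.rint(mean).astype(rgba.dtype)

vol = np.array([[[10, 20, 30, 255], [200, 100, 0, 255]]], dtype=np.uint8)
vol[..., 3] = mean_alpha(vol, threshold=50)
print(vol[..., 3])  # [[  0 100]]
```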

Hmm, does that mean this needs to be some kind of user input during rendering that is adjusted in real time (how much emphasis to put on R, G, B)?

We could imagine adding something like that, yes. It’s probably application-specific. In something like CT it is customary to use x-ray density as an implicit or explicit alpha channel. But you may also want something like gradient opacity expressed in your alpha. Or, if this is some kind of tagged-staining microscopy, it could be that one channel makes more sense to use as alpha.
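To make the "gradient opacity in the alpha" idea concrete, here is a minimal NumPy sketch that sets alpha proportional to the gradient magnitude of one channel, so boundaries become opaque and homogeneous regions transparent. `gradient_alpha` is a hypothetical helper; it assumes a 3D volume of shape (k, j, i, 4) with an unsigned integer dtype and ignores voxel spacing:

```python
import numpy as np

def gradient_alpha(rgba, channel=0):
    """Alpha proportional to the gradient magnitude of one channel.

    Assumes shape (k, j, i, 4), unsigned integer dtype, and each spatial
    dimension of size >= 2. Voxel spacing is ignored.
    """
    data = rgba[..., channel].astype(np.float64)
    gk, gj, gi = np.gradient(data)  # one derivative array per spatial axis
    mag = np.sqrt(gk**2 + gj**2 + gi**2)
    if mag.max() > 0:
        mag = mag / mag.max()  # normalize to [0, 1]
    max_value = np.iinfo(rgba.dtype).max
    return np.rint(mag * max_value).astype(rgba.dtype)

# A step edge along the i axis in channel 0
vol = np.zeros((4, 4, 4, 4), dtype=np.uint8)
vol[:, :, 2:, 0] = 200
vol[..., 3] = gradient_alpha(vol, channel=0)
print(vol[0, 0, :, 3])  # [  0 255 255   0]
```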

What actually is your data and what are you trying to see in it?

It is not specific to anything. I am trying to convince the microscopy group here to use Slicer more for their basic visualization needs. For that I created a simple module that reads their Imaris format, and it seems to work adequately. When it comes to visualizing RGB, there are a couple of issues:

- I can split the channels and assign LUTs (R, G, B) to them manually. That works well, but it breaks down when I want to render them simultaneously. The multi-volume renderer doesn’t seem to support more than two volumes (and is missing features anyway), and if I use GPU raycasting, depth is not preserved correctly.

- It is not clear to me whether RGBA images with 16 bits per channel are supported. It looks like the original data is 16 bits per channel (at least, raw values exceed 256). I have to convert to 8 bits to get it displayed in the slice views (otherwise the slice views are also black).
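For reference, an 8-bit conversion like the one described above could be done per channel, so that channels with very different ranges each use the full 0-255 scale. This is a minimal sketch with a hypothetical helper name; it assumes components are in the last axis, and note that it discards precision compared with the 16-bit original:

```python
import numpy as np

def to_uint8_per_channel(volume):
    """Linearly rescale each component of a (..., C) volume to 0-255.

    Each channel is stretched from its own min to its own max.
    Constant channels map to 0.
    """
    data = volume.astype(np.float64)
    spatial_axes = tuple(range(data.ndim - 1))
    lo = data.min(axis=spatial_axes, keepdims=True)
    hi = data.max(axis=spatial_axes, keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero
    scaled = (data - lo) / span * 255.0
    return np.rint(scaled).astype(np.uint8)

v = np.array([[[154, 46, 75], [3734, 7158, 7689]]], dtype=np.uint16)
print(to_uint8_per_channel(v))
```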

Yes, I’m sure there are limitations to RGBA mode. Remember, it was not working at all at the VTK shader level until I fixed it for the ColorizeVolume module. There could be other limitations that assume RGB will always be 8-bit, but I think in principle the mode should be supported, so any fixes would probably be merged.

But my point is that alpha doesn’t have a fixed meaning for all purposes, so some amount of interface and flexibility would be required.

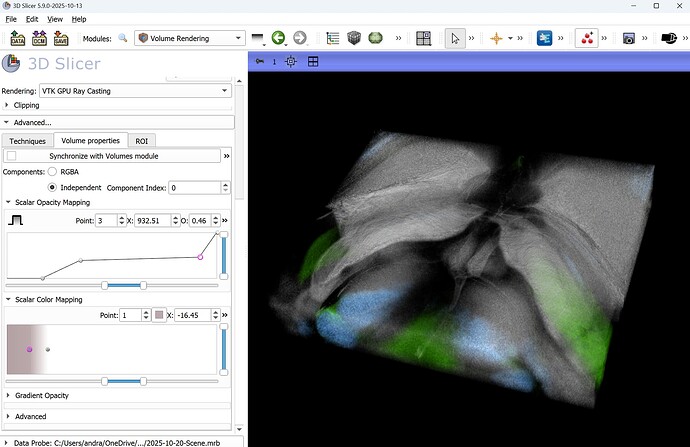

With @Sunderlandkyl we recently added multi-component volume rendering for SlicerHeart (to show B-mode + Doppler images). This is very well applicable to any multichannel images.

The image that you provided had 3 useful channels: the first seems to be anatomy, while the second and third have signal in some smaller regions.

I used a gray color transfer function for component 0, colored ones for components 1 and 2, and a fully transparent opacity for component 3 (because it was just a solid block):

There was no need for any data manipulation in Python, it was all done in the GUI.

Up to 4 channels can be rendered like this independently - you can specify opacity and color transfer function for each channel. If you have more channels then you would need to preprocess the data - either select certain channels or combine multiple channels into up to 4 channels that you render independently or as an RGBA color volume.
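The preprocessing step for more than 4 channels (selecting some channels or combining several into one) can be sketched with NumPy as follows. `reduce_to_four_channels` and its `groups` argument are hypothetical; each output channel here is simply the mean of its group, which is only one possible way to combine channels:

```python
import numpy as np

def reduce_to_four_channels(volume, groups):
    """Combine the channels of a (..., C) volume into at most 4 channels.

    `groups` is a list of up to 4 lists of channel indices; each output
    channel is the mean of its group. A single-index group selects that
    channel unchanged.
    """
    if not 1 <= len(groups) <= 4:
        raise ValueError("need between 1 and 4 channel groups")
    out = [volume[..., list(g)].mean(axis=-1) for g in groups]
    stacked = np.stack(out, axis=-1)
    if np.issubdtype(volume.dtype, np.integer):
        stacked = np.rint(stacked)  # round before casting back to integers
    return stacked.astype(volume.dtype)

# Six channels reduced to three: keep ch0, average ch1 and ch3, keep ch5
v = np.arange(12, dtype=np.uint16).reshape(2, 6)
print(reduce_to_four_channels(v, [[0], [1, 3], [5]]))
```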

That’s cool @lassoan and @Sunderlandkyl! I hadn’t seen that mode before. That’s the point I was making: these channels aren’t really RGB in this interpretation, more like [alpha, stain1, stain2]. If that’s really the way they did the acquisition, this is the sort of interface they probably want.


This is great. It is working for me too!

If this is the case, do we still need RGBA images, or can I just import them as RGB? Also, do you know whether 16 bits per channel is supported?

No need for manual generation of an alpha channel.

- If you load an RGB volume then you can render it as a color volume (alpha channel is generated automatically as the average of the RGB channels).

- If you load a multichannel image then you can render each channel independently.

16 bits per channel is supported. You can render images with 8- to 64-bit integer or floating-point voxels and up to 4 components.

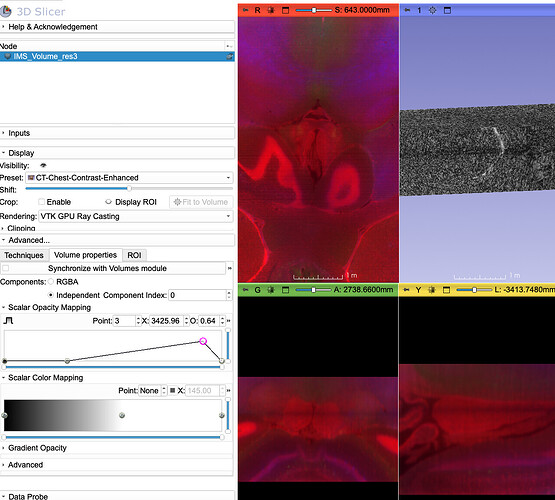

I think there is an issue with the independent components (specifically indices 1 and 2): the Scalar Opacity Mapping range does not go beyond 1024, which appears to be a hardcoded limit (I can’t enter a higher value manually either). It doesn’t happen for component 0, which automatically gets the full intensity range of the data. As a result my rendering is blocky, since I need values around 1300 to remove the noise.

In fact, I flatlined the scalar opacity mapping of all three components (they all have zero opacity), but I am still seeing this block. The first channel has intensities in the 154-3734 range, the second 46-7158, and the third 75-7689.
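Per-channel intensity ranges like the ones quoted above can be computed directly from the voxel array and compared with the range the Scalar Opacity Mapping widget offers for each component. A minimal sketch, assuming components in the last axis; `channel_ranges` is a hypothetical helper:

```python
import numpy as np

def channel_ranges(volume):
    """Return a list of (min, max) tuples, one per component of a (..., C) volume."""
    flat = volume.reshape(-1, volume.shape[-1])
    return [(flat[:, c].min().item(), flat[:, c].max().item())
            for c in range(flat.shape[1])]

v = np.array([[[154, 46, 75], [3734, 7158, 7689]]], dtype=np.uint16)
print(channel_ranges(v))  # [(154, 3734), (46, 7158), (75, 7689)]
```

In Slicer this would be `channel_ranges(arrayFromVolume(getNode("IMS*")))`.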

@Sunderlandkyl @lassoan any thoughts on this?