For scalar (not RGB) volumes

You can copy the volume rendering color transfer function into a color node by pasting this into the Python console:

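```python
# Get the volume property node used by volume rendering (the first one in the scene)
volumeRenderingPropertyNode = slicer.mrmlScene.GetFirstNodeByClass('vtkMRMLVolumePropertyNode')
# Create a new procedural color node
colorNode = slicer.mrmlScene.AddNewNodeByClass('vtkMRMLProceduralColorNode')
# Copy the volume rendering color transfer function into the color node
colorNode.GetColorTransferFunction().DeepCopy(volumeRenderingPropertyNode.GetColor())
```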

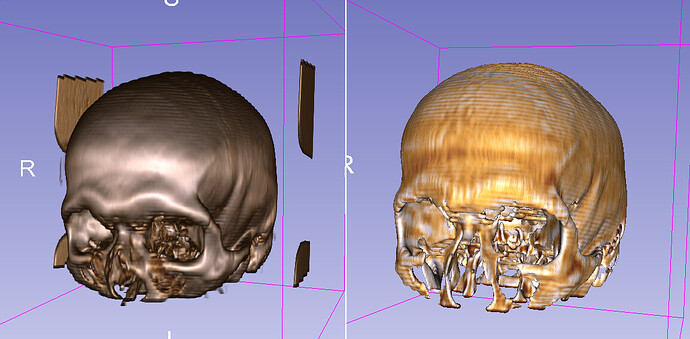
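To see what the copied transfer function does to the probed scalar values, here is a minimal pure-Python sketch of a piecewise-linear color lookup. It is an illustration only, not Slicer's or VTK's implementation: `map_scalar_to_rgb` and the control points in `pts` are hypothetical, colors are interpolated linearly in RGB, values outside the defined range are clamped, and per-point midpoint/sharpness settings are ignored.

```python
from bisect import bisect_right

def map_scalar_to_rgb(value, points):
    """Piecewise-linear lookup; points is a list of (x, (r, g, b)) sorted by x."""
    xs = [x for x, _ in points]
    # This sketch clamps values outside the defined range to the end colors
    if value <= xs[0]:
        return points[0][1]
    if value >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, value)  # points[i - 1].x <= value < points[i].x
    x0, c0 = points[i - 1]
    x1, c1 = points[i]
    t = (value - x0) / (x1 - x0)  # fractional position between the two control points
    return tuple(a + t * (b - a) for a, b in zip(c0, c1))

# Hypothetical control points: black at 0, red at 100, white at 200
pts = [(0, (0, 0, 0)), (100, (1, 0, 0)), (200, (1, 1, 1))]
print(map_scalar_to_rgb(150, pts))
```

Each probed point gets its color only from its own scalar value, so if all probed values are nearly equal, the surface colors will be nearly equal too.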

However, as I wrote above, do not expect surface rendering to look even remotely similar to volume rendering. Expect something like this instead (left: volume rendering; right: surface rendering of the probed surface):

The reason is that the texture/discoloration in volume rendering comes from the cloud of lower- or higher-intensity voxels around the isosurface value. In surface rendering, the discoloration comes mainly from image interpolation artifacts: if you segment by thresholding, then ideally every point of the resulting surface has exactly the same scalar value, and any difference is due to small interpolation errors.
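A 1D analogy makes this concrete. In the sketch below (the intensity profile, threshold, and function names are all hypothetical), the "surface" is placed where the linearly interpolated profile crosses the threshold, and probing the profile at those positions with the same interpolation gives back the threshold value, so in this idealized case the probed colors carry essentially no texture.

```python
def lerp_sample(signal, x):
    """Sample a 1D signal at fractional position x using linear interpolation."""
    i = min(int(x), len(signal) - 2)
    t = x - i
    return signal[i] * (1 - t) + signal[i + 1] * t

def threshold_crossings(signal, threshold):
    """Fractional positions where the linearly interpolated signal crosses the threshold."""
    crossings = []
    for i in range(len(signal) - 1):
        a, b = signal[i], signal[i + 1]
        if (a - threshold) * (b - threshold) < 0:  # strict sign change between samples
            crossings.append(i + (threshold - a) / (b - a))
    return crossings

profile = [10, 30, 80, 120, 90, 40, 20]  # hypothetical intensities along a line
threshold = 60
surface = threshold_crossings(profile, threshold)  # "segmentation by thresholding"
probed = [lerp_sample(profile, x) for x in surface]  # "probe volume with model"
print(surface, probed)
```

Every probed value equals the threshold up to floating-point rounding; in a real 3D segmentation the surface is only approximately on the isosurface, which is where the small interpolation differences come from.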

If you want somewhat more similar results, you can apply some Gaussian smoothing to the input volume (using the Gaussian Blur Image Filter module) before you probe the volume with the model. This lets the surrounding region influence each point's intensity:
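Continuing the same hypothetical 1D analogy, the sketch below blurs the profile with a normalized Gaussian kernel and then probes it at the surface positions found on the unblurred profile. It only stands in for the Gaussian Blur Image Filter module, which works on 3D images; the profile, sigma, kernel radius, and edge handling (replicating border samples) are illustrative assumptions.

```python
import math

def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(signal, kernel):
    """Convolve with a symmetric kernel, replicating edge samples at the borders."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp index to [0, n - 1]
            acc += w * signal[j]
        out.append(acc)
    return out

def lerp_sample(signal, x):
    """Sample a 1D signal at fractional position x using linear interpolation."""
    i = min(int(x), len(signal) - 2)
    t = x - i
    return signal[i] * (1 - t) + signal[i + 1] * t

profile = [10, 30, 80, 120, 90, 40, 20]  # hypothetical intensities along a line
surface = [1.6, 4.6]  # where the raw profile crosses the threshold 60
raw_probe = [lerp_sample(profile, x) for x in surface]
smoothed = blur(profile, gaussian_kernel(sigma=1.0, radius=2))
smoothed_probe = [lerp_sample(smoothed, x) for x in surface]
print(raw_probe, smoothed_probe)
```

Both surface points probe to the same value on the raw profile, but after blurring they differ because their neighborhoods differ, which is the kind of variation that brings back some texture.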

For RGB volumes

If you have RGB volumes, then you don't need a colormap; use direct color mapping instead. The fundamental difference between volume rendering and surface rendering still applies, and you'll basically get a uniformly colored surface if you create segments by thresholding.
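The difference between colormap and direct mapping can be sketched as follows. This is an illustration, not Slicer's code: it assumes 8-bit RGB components (0 to 255), and the function names and the grayscale lookup table are hypothetical.

```python
def colormap_color(scalar, lut):
    """Colormap: a single scalar value selects a color from a lookup table."""
    index = max(0, min(int(scalar), len(lut) - 1))  # clamp to the table range
    return lut[index]

def direct_color(rgb_voxel, max_value=255.0):
    """Direct mapping: the voxel's own (R, G, B) components are used as the color."""
    return tuple(component / max_value for component in rgb_voxel)

grey_lut = [(i / 255.0,) * 3 for i in range(256)]  # hypothetical grayscale table
print(colormap_color(128, grey_lut))
print(direct_color((255, 128, 0)))
```

With direct mapping no lookup table is involved, so the probed RGB values themselves determine the surface color.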

If the result looked like the one in your screenshot, you probably chose the wrong volume (not the RGB volume) in Probe volume with model. Try the probing again, and if you cannot figure out what's wrong, upload your scene as a .mrb file somewhere and post the link here.

I get this with the default red-green-blue colormap:

And this is what I see when I switch to direct mapping:

I'm not sure what your goal is, but I don't think you can get realistic surface textures from the colors in these cross-sectional images. If you want a nice surface texture, it is better to take a photo of dissected organs and apply that texture to the surface models.